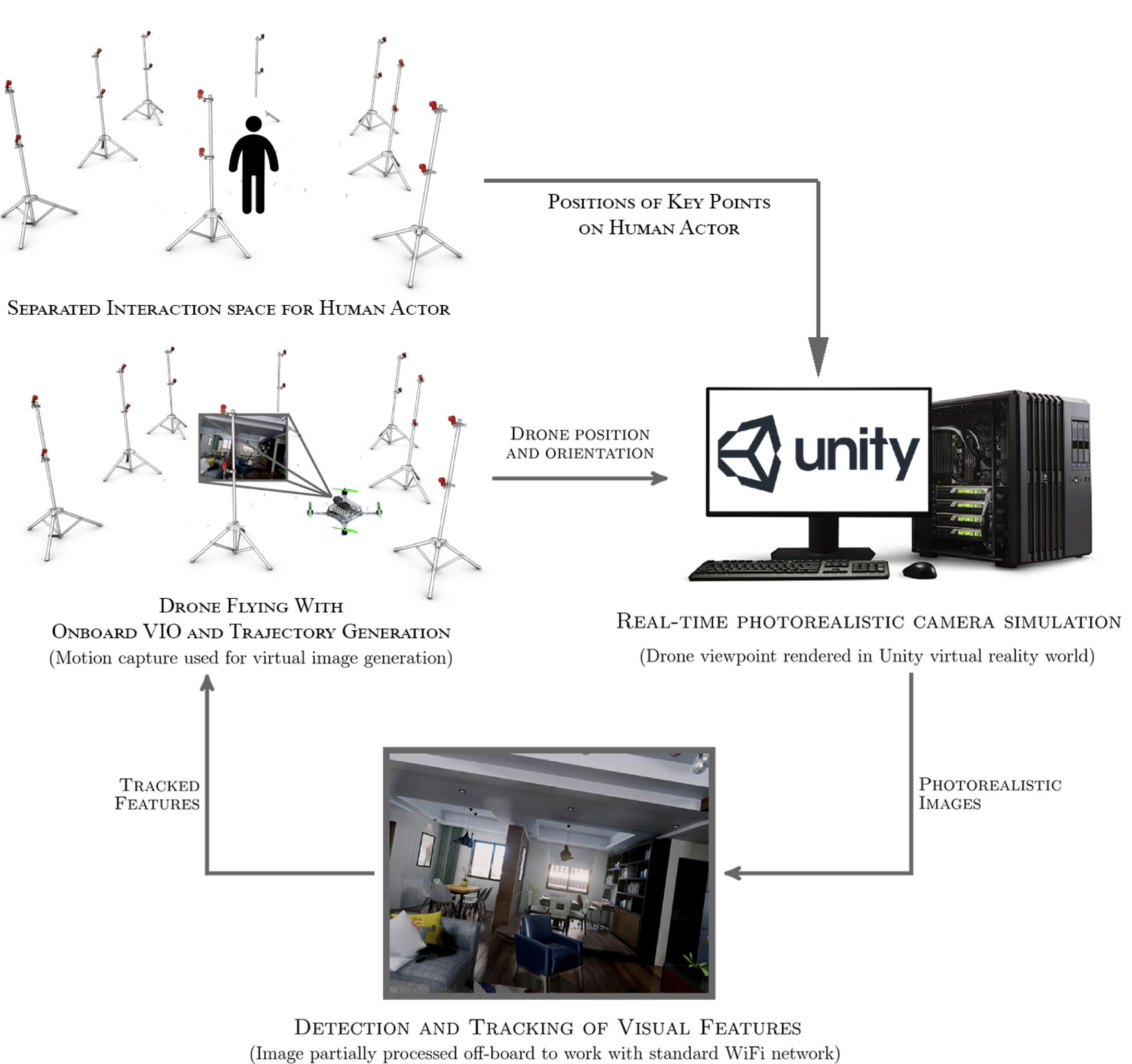

In this scenario, we describe utilizing the FlightGoggles simulator with agent(s) in the loop using motion capture. We replace the dynamics simulation nodes with pose information from motion capture. An illustrative diagram of a typical visual inertial navigation scenario with FlightGoggles is shown in Fig 1.

Fig 1. Dataflow for using Virtual Reality Simulation with motion capture for visual inertial navigation.

Motion Capture

We recommend using our OptiTrack driver that can be found here: https://github.com/mit-fast/OptiTrack-Motive-2-Client. In this driver, we publish the pose of the agent to tf with the frame /uav/imu with respect to the global frame mocap.

ROS Launch file

To run this example application, please run roslaunch flightgoggles mocapExample.launch. The launch file starts with the arguments ignore_collisions which disables publication of collisions and render_stereo which optionally enables stereo rendering.

<launch> <arg name="ignore_collisions" default="false" /> <arg name="render_stereo" default="0" />

Next, we launch the OptiTrack ROS node

<node name="optitrack" pkg="optitrack_motive_2_client" type="optitrack_motive_2_client_node" args="--server 192.168.1.12 --local 192.168.1.103" />

And, finally we can launch the core flightgoggles client node

<include file="$(find flightgoggles)/launch/core.launch">

<arg name="render_stereo" value="$(arg render_stereo)"/>

<arg name="ignore_collisions" value="$(arg ignore_collisions)"/>

</include>

</launch>