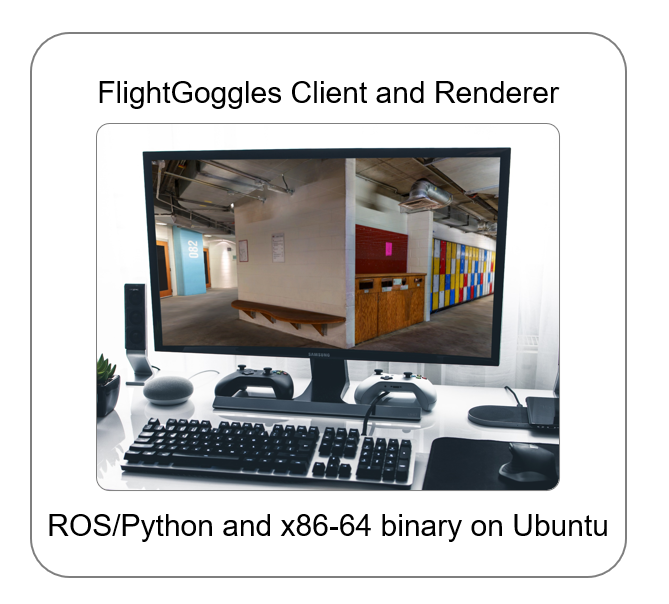

FlightGoggles consists of two parts:

- **Renderer:** The FlightGoggles Renderer is the back-end of the system. Based on Unity3D, it simulates exteroceptive sensors such as cameras and range sensors (e.g., LiDARs). We provide the Renderer as a binary for Ubuntu and Windows. Its Unity source code is open, as are the photorealistic assets, which were generated using photogrammetry.
- **Client:** The FlightGoggles Client is the front-end of the system. It lets users configure simulation and rendering settings, and it supplies the Renderer with the vehicle poses used for sensor simulation, taken from either simulation or motion-capture data. The Client also includes a library for simulating multicopter and car dynamics, as well as IMU measurements.
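To make the division of labor concrete, the sketch below models the data that flows from the Client to the Renderer: at each step, the Client produces a timestamped pose for every camera, and the Renderer turns those poses into images. This is an illustration only. The `Pose` and `RenderRequest` classes, the `encode_request`/`decode_request` helpers, and the JSON encoding are all hypothetical and are not the FlightGoggles wire format or API.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class Pose:
    """Hypothetical camera pose: position in meters, orientation as a unit quaternion (w, x, y, z)."""
    x: float
    y: float
    z: float
    qw: float = 1.0
    qx: float = 0.0
    qy: float = 0.0
    qz: float = 0.0


@dataclass
class RenderRequest:
    """Hypothetical Client-to-Renderer message: one timestamp plus one pose per camera."""
    timestamp_s: float
    camera_ids: List[str]
    poses: List[Pose]


def encode_request(req: RenderRequest) -> str:
    """Serialize a request on the Client side (JSON is an illustrative choice)."""
    if len(req.camera_ids) != len(req.poses):
        raise ValueError("each camera needs exactly one pose")
    # asdict() recursively converts the nested Pose dataclasses into dicts.
    return json.dumps(asdict(req))


def decode_request(payload: str) -> RenderRequest:
    """Rebuild the request on the Renderer side."""
    data = json.loads(payload)
    return RenderRequest(
        timestamp_s=data["timestamp_s"],
        camera_ids=data["camera_ids"],
        poses=[Pose(**p) for p in data["poses"]],
    )
```

For example, `decode_request(encode_request(RenderRequest(0.01, ["cam_left"], [Pose(1.0, 2.0, 1.5)])))` yields an object equal to the original request. Where the poses come from (a dynamics model or motion-capture data) does not matter to the Renderer, which is what lets the same back-end serve both pure simulation and hardware-in-the-loop setups.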

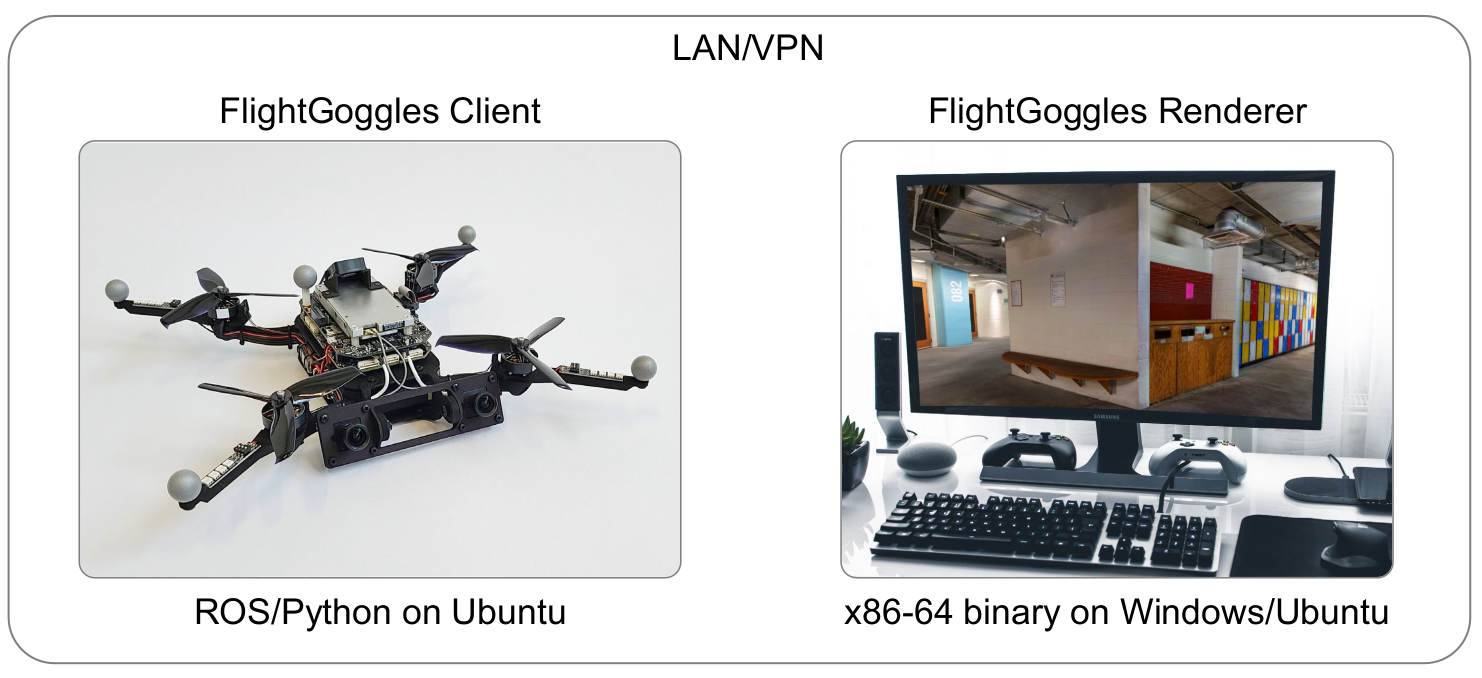

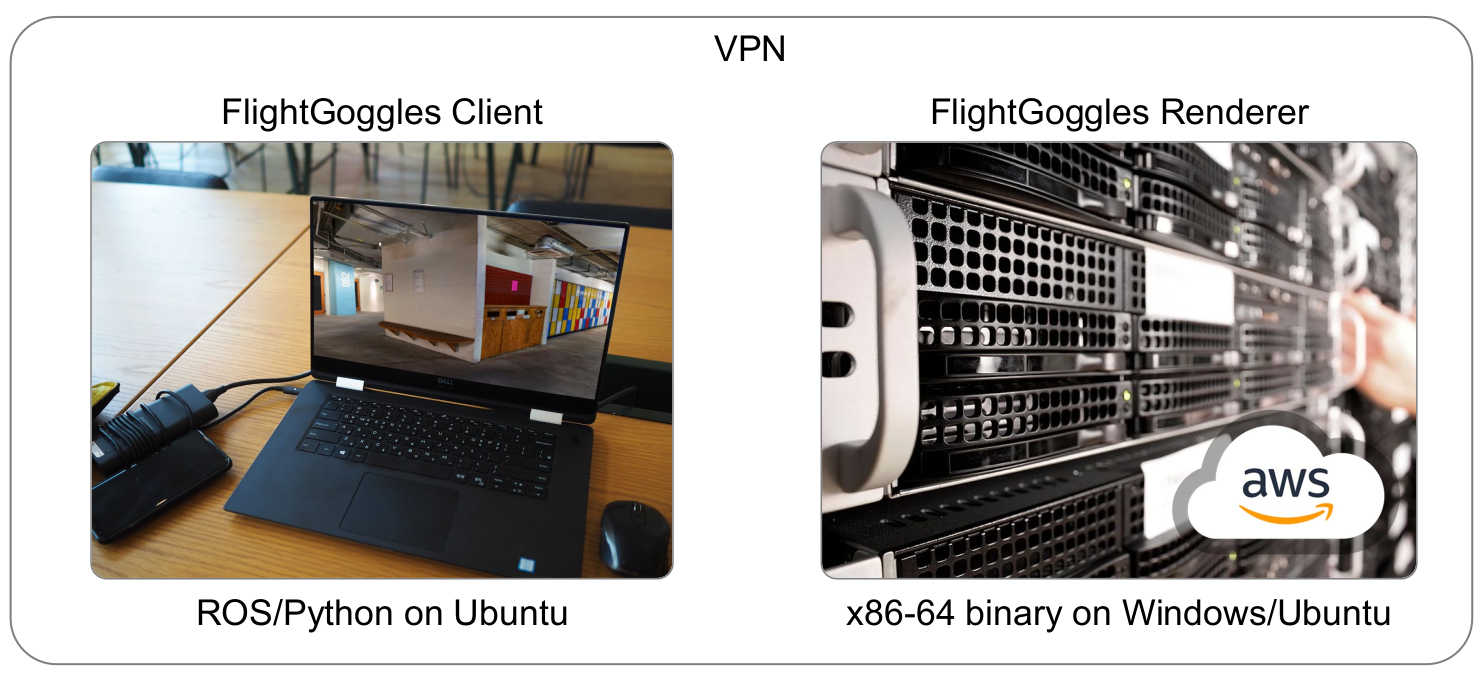

The Renderer and Client can run on separate computers connected to a local network or a virtual private network (VPN). For example, for hardware-in-the-loop experiments the Renderer can be run on a desktop computer, while the Client runs on a mobile computing platform. The Renderer can also run in the cloud, which we have verified using Amazon Web Services (AWS).

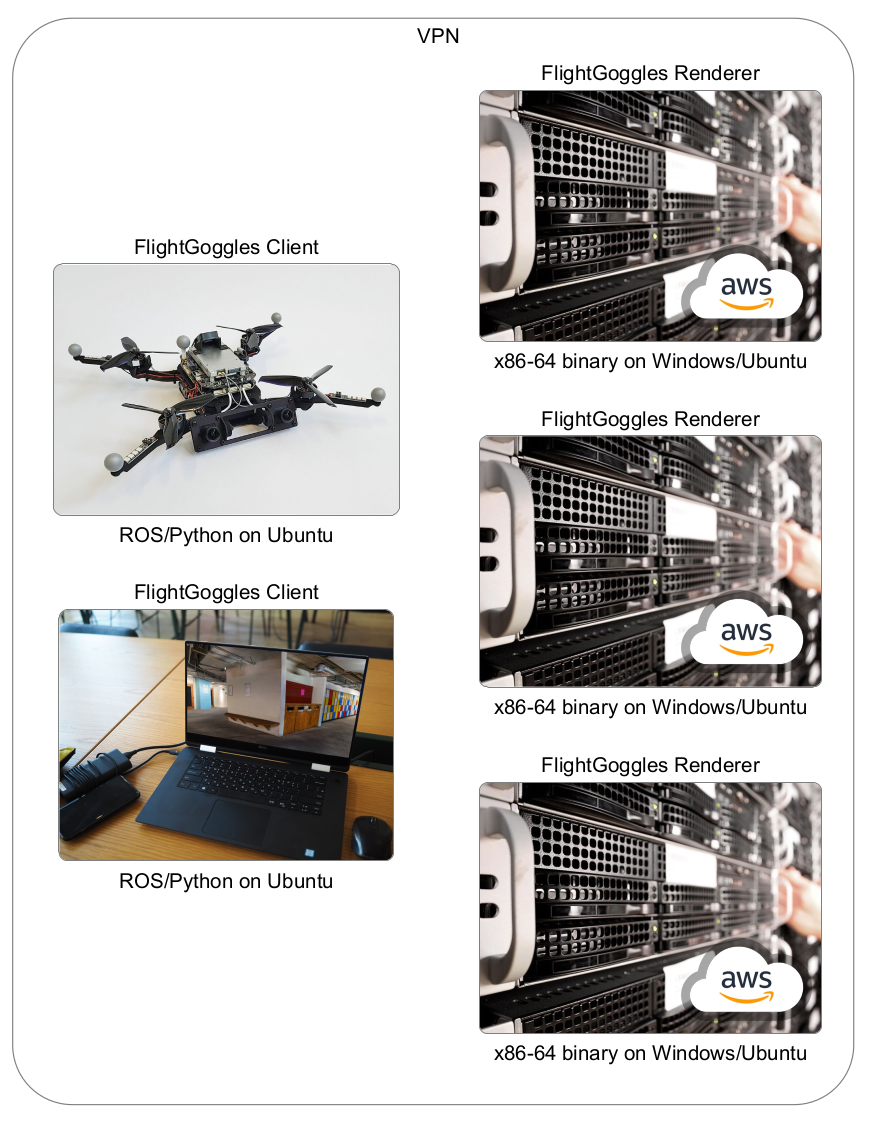

It is possible to run multiple instances of the Client and/or Renderer. This is useful when simulating multiple vehicles or multiple cameras. For example, when simulating a stereo camera, the images of each camera can be rendered on a separate computer or AWS instance, which distributes the computational load. Simply install the Client or Renderer on each system as needed, and follow the configuration instructions in ROS Interface or Python Interface.
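One simple way to plan such a distributed setup is to spread the cameras evenly over the available Renderer machines. The sketch below does this with round-robin assignment. The `assign_cameras` helper, the camera names, and the IP addresses are hypothetical placeholders for illustration; the actual configuration is done as described in ROS Interface or Python Interface.

```python
from typing import Dict, List


def assign_cameras(cameras: List[str], renderer_hosts: List[str]) -> Dict[str, List[str]]:
    """Hypothetical helper: assign camera sensors to Renderer hosts round-robin.

    Camera i goes to host i mod len(renderer_hosts), so each host receives
    at most one more camera than any other host.
    """
    if not renderer_hosts:
        raise ValueError("at least one renderer host is required")
    assignment: Dict[str, List[str]] = {host: [] for host in renderer_hosts}
    for i, cam in enumerate(cameras):
        host = renderer_hosts[i % len(renderer_hosts)]
        assignment[host].append(cam)
    return assignment


# Stereo example: each camera of the pair is rendered on its own machine.
plan = assign_cameras(["cam_left", "cam_right"], ["192.168.1.10", "192.168.1.11"])
print(plan)
```

Round-robin assumes every camera costs roughly the same to render; if the cameras differ in resolution or frame rate, a weighted assignment would balance the load better.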